This repository contains the code for the papers:

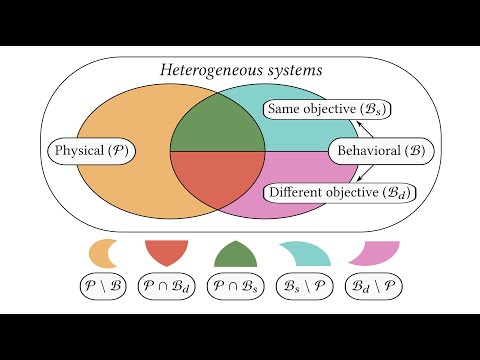

- Heterogeneous Multi-Robot Reinforcement Learning

- System Neural Diversity: Measuring Behavioral Heterogeneity in Multi-Agent Learning

If you use HetGPPO in your research, cite it using:

@inproceedings{bettini2023hetgppo,
  title = {Heterogeneous Multi-Robot Reinforcement Learning},
  author = {Bettini, Matteo and Shankar, Ajay and Prorok, Amanda},
  year = {2023},
  booktitle = {Proceedings of the 22nd International Conference on Autonomous Agents and Multiagent Systems},
  publisher = {International Foundation for Autonomous Agents and Multiagent Systems},
  series = {AAMAS '23}
}

If you use SND in your research, cite it using:

@article{bettini2023snd,
  title = {System Neural Diversity: Measuring Behavioral Heterogeneity in Multi-Agent Learning},
  author = {Bettini, Matteo and Shankar, Ajay and Prorok, Amanda},
  journal = {arXiv preprint arXiv:2305.02128},
  year = {2023}
}

Watch the presentation video of HetGPPO.

Watch the talk about HetGPPO.

Clone the repository using

git clone --recursive https://github.com/proroklab/HetGPPO.git

cd HetGPPO

Create a conda environment and install the dependencies:

pip install "ray[rllib]"==2.1.0

pip install -r requirements.txt

The training scripts to run HetGPPO in the various VMAS scenarios can be found in the train folder.

These scripts use the HetGPPO model in models/gppo.py to train multiple agents in different scenarios. The scripts log to wandb.

You can run them with:

python train/train_flocking.py

The hyperparameters in each script can be changed to suit your needs.

Several utilities can be found in utils.py, including the callback that computes the SND heterogeneity metric.
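To give an intuition for what the SND metric measures, here is a minimal sketch. It is not the callback from utils.py. It assumes SND is the mean pairwise 2-Wasserstein distance between the agents' action distributions, averaged over a set of observations, and that each policy outputs a diagonal Gaussian. The function names and the input layout are hypothetical, chosen only for this illustration.

```python
import math
from itertools import combinations


def wasserstein_diag_gaussians(mean_a, std_a, mean_b, std_b):
    """2-Wasserstein distance between two diagonal Gaussians."""
    # For diagonal covariances: W2^2 = ||mu_a - mu_b||^2 + ||sigma_a - sigma_b||^2
    squared = sum((ma - mb) ** 2 for ma, mb in zip(mean_a, mean_b))
    squared += sum((sa - sb) ** 2 for sa, sb in zip(std_a, std_b))
    return math.sqrt(squared)


def system_neural_diversity(policies):
    """Mean pairwise behavioral distance across agents (illustrative only).

    policies[i][k] is the (mean, std) pair that agent i outputs for
    observation k; all agents are evaluated on the same observations.
    """
    pairs = list(combinations(range(len(policies)), 2))
    if not pairs:
        return 0.0  # fewer than two agents: no diversity to measure
    total = 0.0
    for i, j in pairs:
        distances = [
            wasserstein_diag_gaussians(mean_i, std_i, mean_j, std_j)
            for (mean_i, std_i), (mean_j, std_j) in zip(policies[i], policies[j])
        ]
        total += sum(distances) / len(distances)  # average over observations
    return total / len(pairs)  # average over agent pairs


# Two agents with identical outputs on one observation: no diversity.
homogeneous = [[([0.0, 0.0], [1.0, 1.0])], [([0.0, 0.0], [1.0, 1.0])]]
print(system_neural_diversity(homogeneous))  # 0.0

# Two agents whose action means differ by (3, 4) with equal standard deviations.
heterogeneous = [[([0.0, 0.0], [1.0, 1.0])], [([3.0, 4.0], [1.0, 1.0])]]
print(system_neural_diversity(heterogeneous))  # 5.0
```

Under these assumptions the metric is zero when all agents behave identically and grows as their action distributions drift apart. Refer to the callback in utils.py for the version used during training.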

Several evaluation tools can be found in the evaluate folder.